Surveys That Work

A Practical Guide for Designing and Running Better Surveys

Surveys That Work explains a seven-step process for designing, running, and reporting on a survey that gets accurate results. In a no-nonsense style with plenty of examples about real-world compromises, the book focuses on reducing the errors that make up Total Survey Error—a key concept in survey methodology. If you are conducting a survey, this book is a must-have.

Access extra content on Caroline’s website here.

Testimonials

Caroline has created a must-read ‘one-stop source’ for those looking to conduct a survey. Her book guides the reader through all the necessary stages for creating a robust survey, from beginning to end. The book can be used by experienced survey methodologists looking to improve the surveys they run and by those who are new to the survey world. Refreshingly, its clear and simple language makes survey methodology accessible to the masses, and its practical approach supports this. Importantly, it also helps the reader work out whether a survey is indeed the right choice—a step which is all too often overlooked. Helpfully, the book provides suggestions for additional reading for those who want to explore particular aspects of survey design further. Read this book—and learn from one of the best.

—Laura Wilson, Data Quality Hub Lead, UK Office for National Statistics

This book is a sharpening toolkit for taking haphazard surveys and making them useful. As research gets faster and faster, this book will help you keep pace with changing landscapes, especially in digital industries.

—Akil Benjamin, Strategy Director, COMUZI

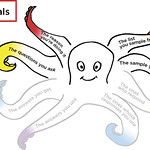

If surveys are one of the research techniques you use (or might use), you need this book! In an easy-to-read, conversational style, Caroline takes you through the entire process with wonderful examples, helpful stories, and tons of good advice. And where else would you have an adorable ‘Survey Octopus’ to remind you of all the elements you need for a successful survey and of how to minimize the various problems that can impact the value of your results?

—Janice (Ginny) Redish, author of Letting Go of the Words—Writing Web Content that Works

Those of us in the user experience design practice have needed this book for a long time. If you have avoided using surveys as a tool for understanding the needs of the people who use your products, shy away no more. Caroline’s mastery of method and her encouraging voice come through in every sentence. This book is a delight to read and use.

—Dana Chisnell, Fellow, Belfer Center for Science and International Affairs and Policy Designer, U.S. Digital Service

All praise for Surveys That Work and the Survey Octopus! This is crucial reading for all those who want to deepen their user-centered practice with quantitative research. A thorough exploration of all dimensions of the thinking process behind good surveys, this book will make you a better questioner!

—Misaki Hata, Service designer, NHS Digital

This book is delightful; I’m excited to share it with you. Caroline will help you know when to do a survey, and how to do it well. When we do good research, we can make good decisions!

—Kathryn Summers, Professor, University of Baltimore

Table of Contents

Foreword by Steve Krug

Chapter 0: Definitions Chapter: What Is a Survey? And the Survey Octopus

Chapter 1: Goals: Establish Your Goals for the Survey

Chapter 2: Sample: Find People Who Will Answer

Chapter 3: Questions: Write and Test the Questions

Chapter 4: Questionnaire: Build and Test the Questionnaire

Chapter 5: Fieldwork: Get People to Respond

Chapter 6: Responses: Turn Data into Answers

Chapter 7: Reports: Show the Results to Decision-Makers

Chapter 8: The Least You Can Do

FAQ

These common questions and their short answers are taken from Caroline Jarrett’s book Surveys That Work: A Practical Guide for Designing and Running Better Surveys. You can find longer answers to each in your copy of the book, in either the printed or digital version.

- I see so many bad surveys—isn’t the best survey the one that’s not done at all?

Unfortunately, we are all bombarded with bad surveys. For example, someone in an organization decides that constantly blasting out questionnaires to every customer is a great way to get feedback. Their response rate is terrible, but they don’t consider that this poor response will simply create lots of errors—and annoyed customers. And since these bad questionnaires go to everyone, you’ve got a very good chance of seeing too many questionnaires—and many of them will be rotten ones. A bad survey gets you bad data. A bad application of any method gets you bad data.

Sample Chapter

This is a sample chapter from Caroline Jarrett’s book Surveys That Work: A Practical Guide for Designing and Running Better Surveys. 2021, Rosenfeld Media.

Chapter 1: Goals: Establish Your Goals for the Survey

In this chapter, you’re going to think about the reason why you’re doing the survey (Figure 1.1).

By the end of the chapter, you’ll have turned the list of possible questions into a smaller set of questions that you need answers to.

Write down all your questions

I’m going to talk about two sorts of questions for a moment:

- Research questions

- Questions that you put into the questionnaire

Research questions are the topics that you want to find out about. At this stage, they may be very precise (“What is the resident population of the U.S. on 1st April in the years of the U.S. Decennial Census?”) or very vague (“What can we find out about people who purchase yogurt?”).

Questions that go into the questionnaire are different; they are the ones that you’ll write when you get to Chapter 3, “Questions.”

Now that I’ve said that—don’t worry about it. At this point, you ought to have neatly defined research questions, but my experience is that I usually have a mush of draft questions, topic titles, and ideas (good and bad).

Write down all the questions. Variety is good. Duplicates are OK.