Day 3: Why Changing Hearts & Minds Doesn’t Work When Promoting DE&I Efforts, but Checklists Do

Thank you for the introduction and we are pumped to be here

-

We use a lot of animated GIFs and have tried to avoid unexpected contrasts

-

Will lean on those in chat to look at certain content

— We can all say 2020 was a very interesting time

-

Witnessed an unprecedented number of people taking to the streets

-

It felt like people were getting on board

-

— Saw brands with sponsored conversations, DEI statements, and a focus on equity-focused roles

-

What a time for the DEI enthusiast

— Wanted to bring the design org to parity with the rest of the org on DEI

-

We did a book club, media club, and Slack channel

— These efforts didn’t work. Excitement turned to burnout, and we were stressed and not moving the needle on our goals

— Most importantly we couldn’t scale

-

Dominique Ward defines scale as doing less while having greater impact

-

We were doing the most on our team and having little impact

-

— So what were the goals?

-

1) Change makeup of the design team

-

2) Provide access to jobs and salaries to those historically excluded

— Reflected on things we did

-

Had good attendance and engagement, but that didn’t equal success in terms of outcomes

— So people were talking, but when we asked “How have you seen this show up at org?”

-

There was radio silence when this was asked

— This set up the problem: everything we talked about was too removed from day-to-day work and organizational context

-

People were at remedial Race Relations 101, while we were asking for high-level analysis

— This was bolstered by data in our org and others

-

A study by HBR on DEI in the workplace pointed out that BIPOC employees have more mistakes listed in their feedback

-

Fewer leadership mentions for BIPOC versus white women

-

Women 11x more likely to be described as abrasive

-

— So we changed our focus from hearts and minds to tangible impact on company goals

— Two ways we did this:

-

Created objective evaluation criteria

-

Evidence-based performance reviews

— Before the criteria, we’ll touch upon three group norms (from Michelle Bess): commitments we make together in this virtual space

— Three group norms

-

Stay present and don’t check out of conversation, as much as possible

-

Be open, as we are offering new perspectives, and resist the urge to dismiss what you are hearing.

-

Reflect on how this might work, and approach with curiosity

-

Sit with discomfort, and you can see yourself reflected in stories told

-

Sit with uncomfortable feelings and where they are coming from

-

— We’ll kick off the discussion with a story

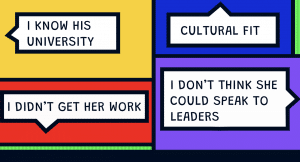

— We were hiring an early-career researcher for the org

-

We had a Black female candidate who had credentials from an agency, along with master’s experience

-

Our white female research lead passed on her, and we could understand this

— But to our surprise, she then had a portfolio review with a white man who had no degree, and said to hire him

— I would say that’s pretty racist, but

— There was a lot of word salad thrown out to minimize what happened

— So we decided to do a side-by-side comparison of the two candidates, and this got me thinking about objective metrics

-

We were moving the goalposts depending on who was applying

— So we created 12 core competencies for the design org

-

Not just for product designers and researchers, but also for analysts

-

Worked to talk through skills important for each capability

— Note that we don’t expect a person to be strong in all 12, but to have the most important skills for the capability they represent and their level in the org

— We used examples and demonstrated behaviors for a skill like writing

-

e.g. writing macro- and micro-copy

— We asked what the best way to measure them is, and how the org would measure them

-

Encouraged people to share evaluation methods on Slack

— We used a scale based on actions rather than perceived knowledge, and on how an individual used the competency

-

Scale ranged from being completely unfamiliar with the competency to using it in new ways

— How knowledgeable you are often depends on the eye of the beholder

-

You might go to a great school, but an interviewer can disregard that

— You can move from “I know,” which is subjective, to “I can,” which can be demonstrated

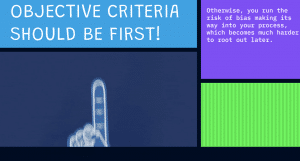

— So coming up with objective criteria is the first thing to do

-

Without this, you run the risk of all kinds of biases creeping into processes

-

This is hard to fix after the fact

— We laid the foundation for future work we did

-

Fill out a cheat sheet as a guide: take the basic hiring information and what you are looking for when hiring someone

-

Pick the required competencies (3, and at what level)

-

Behaviors in case studies and actions to demonstrate, along with sample discussion questions

-

Finally, have an archetype

-

Highlight three non-negotiables, and an archetype to help sort out closely qualified candidates

-

We included a FigJam for the work and joint notes on evaluating the candidate and why they might be a good fit

— Creating objective criteria helped the team be more expansive and helped people notice when they were looking for reasons not to hire someone

-

If a person met the basic competencies, they could pass on to the next tier of interviews
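One way to picture this screening step as a checklist is the minimal Python sketch below. It is an illustration only, not the template from the talk: the 0 to 4 level scale, the competency names, and the `passes_screen` helper are all assumptions made up for the example. It encodes just one idea from the notes: a candidate who has demonstrated the required competencies at the required level moves on to the next tier.

```python
from dataclasses import dataclass

# Hypothetical scale bounds: 0 = completely unfamiliar with the competency,
# 4 = using it in new ways. The talk describes the endpoints, not the numbers.
MIN_LEVEL, MAX_LEVEL = 0, 4


@dataclass(frozen=True)
class Requirement:
    competency: str
    min_level: int  # lowest demonstrated level accepted for this role


def passes_screen(requirements, demonstrated):
    """Return (passed, gaps) for one candidate.

    `demonstrated` maps competency name -> level the panel saw evidence for
    ("I can"), not the level the candidate claims ("I know").
    """
    gaps = [
        r.competency
        for r in requirements
        if demonstrated.get(r.competency, MIN_LEVEL) < r.min_level
    ]
    return (not gaps, gaps)


# Illustrative role with three required competencies (names are made up).
role = [
    Requirement("writing", 2),
    Requirement("research planning", 3),
    Requirement("synthesis", 2),
]

candidate_a = {"writing": 3, "research planning": 3, "synthesis": 2}
candidate_b = {"writing": 4, "research planning": 1}

print(passes_screen(role, candidate_a))  # (True, [])
print(passes_screen(role, candidate_b))  # (False, ['research planning', 'synthesis'])
```

Because the same requirements are applied to every candidate, the goalposts cannot quietly move from one applicant to the next, and any gap is named explicitly instead of being left to a general impression.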

— This helped us ask the right questions: why select this particular candidate, and is bias showing up?

-

Got great feedback on this for making the role clear to the team

— Found that objective criteria, tied to an org cycle, helped us move from trying to change the way individuals think to changing a specific process

-

Applied this logic to the employee journey

-

Example: the performance review process at our organization

— So where would you focus next, in the context of the employee journey:

-

We asked ourselves: Where do we see a high likelihood of bias?

-

The more a step depends on individuals as opposed to processes, the more likely bias is

-

So we looked at the parts of the journey where HR was not in charge of the process

— So we focused on performance reviews, where a lot of bias existed

-

The literature found that global ratings are a petri dish for bias, due to:

-

1) Confirmation bias, with preconceived notions based on characteristics and traits, and attention paid to what confirms them

-

2) People being rated on potential impact only for one group (‘white men’), while others are asked to perform and deliver results

-

3) Performance reviews also had long-term and short-term consequences

-

Short-term: Performance reviews played a part in determining the bonus and compensation package for the year

-

Long-term: Evaluation tied to career opportunities inside and outside the org

— So we introduced evidence-based performance reviews through a workshop, along with a process to remove bias from feedback discussions as much as possible

-

Focus on minimizing bias in the conversation

— Introduced three principles for managers and team members

- Give 3 pieces of concrete evidence for the feedback you give an individual

- If asked “Is this a trend?”, you have a lot of information about the individual, and can give more constructive criticism

- It also minimizes the halo/horn effect, where white men who do one thing right have everything else cast as positive,

- while for BIPOC workers, doing one thing wrong is a black mark against them, whatever else they do
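The three-pieces-of-evidence rule is simple enough to express as a checklist in code. The Python sketch below is a hypothetical illustration, not a tool shown in the talk: the `Feedback` record, the `needs_follow_up` helper, and the sample reviewers and comments are all invented for the example. Only the threshold of three comes from the notes.

```python
from dataclasses import dataclass, field

MIN_EVIDENCE = 3  # from the notes: three pieces of concrete evidence


@dataclass
class Feedback:
    reviewer: str
    claim: str
    evidence: list = field(default_factory=list)  # concrete examples


def needs_follow_up(items, min_evidence=MIN_EVIDENCE):
    """Return the feedback items that lack enough concrete evidence."""
    return [f for f in items if len(f.evidence) < min_evidence]


# Made-up sample data for illustration only.
feedback = [
    Feedback(
        "reviewer_1",
        "Communicates clearly with stakeholders",
        ["Q1 readout", "Research plan doc", "Weekly sync notes"],
    ),
    Feedback("reviewer_2", "Comes across as abrasive", []),
]

for f in needs_follow_up(feedback):
    print(
        f"Follow up with {f.reviewer}: '{f.claim}' has "
        f"{len(f.evidence)} of {MIN_EVIDENCE} pieces of evidence"
    )
```

A flagged item is not thrown out automatically. It is a prompt to go back to the reviewer and ask whether the behavior showed up at other times.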

— It is not enough for people to look at their own feedback and eliminate bias from the conversation themselves

-

Instead, we ask managers to audit feedback and use what they learn to spot bias

— Performance reviews were grounded in research, and the workshop used examples to examine biased feedback

— Managers audited individual pieces of feedback, and then audited all feedback to look for trends and outliers

-

This is a way to see where bias slips in

-

Example: a manager with three individuals noticed that one stood out

-

Look at their characteristics and you can probably see bias at play

— Make performance review conversations constructive by following up with reviewers and making the process dynamic

-

Go back and, if there aren’t three pieces of evidence, ask whether there are other times the behavior showed up

-

Consider other critical context you are missing

-

Pay attention to trends, and have conversations with people

— We introduced the workshop to move away from looking at personality-driven moments in time and to make performance reviews constructive

-

Concrete evidence based criticism let us take performance reviews

— People thought this was an incredibly successful workshop compared to others

— Asked participants to do the opposite of introspection; instead…

— We asked them to audit other people’s feedback, bringing an anti-bias lens to it

— The results?

-

Empowered team members to call out bias. Opened up performance reviews to scrutiny for potential bias

-

Held managers to a system for reviews

-

“They liked how objective criteria were taken to heart, and used empirical data to back up claims, and empowered people to do this themselves”

— We had different idea of success

-

Checklists tied to objective criteria and organizational cycles

— We are not fully there on accountability

-

We still need to tie performance to incentives and disincentives

— We are eager to hear your thoughts on accountability

— We also have another request

-

We want to scale the practice beyond our own org, and will link all resources with the session to help you find the pieces of the org cycle that are relevant to you, as well as the associated checklists

— To sum up:

-

Checklists moved the needle, as they were different from free-form discussions and centered facts in objective criteria and evidence

-

Got people around the table to come to mutual agreement

-

Not impacted by individual bias, so conversations can happen where bias wouldn’t impact the end result

— In conclusion, forget hearts and minds and don’t go chasing waterfalls

-

Disrupt your org with checklists

FAQ

-

Can you share a version of the cheat sheet? Looks amazing.

-

Yes, we can share the template we used, but work out your competencies and criteria first. The cheat sheet is a playbook

-

The link will be on Google Drive, in the request for resources.

-

Is the hiring cheat sheet used before interviews or after? Does the panel know beforehand?

-

Yes, it goes out to the entire panel, and we have a FigJam to take notes and gut-check on non-negotiables

-

It is also used to help write the job description itself, beyond the strict interview space

-

Story behind the matching citrus shirts in the call?

-

No story, we just decided on matching citrus shirts
