Day 2-AI as a Design Partner: How to Get the Most Out of AI Tools to Scale Your Process

— I’m excited to be here, talking about AI as a design partner and how to get the most out of AI tools to scale your process

-

Sr. Product Designer at Google

— I think about designing systems at scale, and have worked at health companies and on Google products like Nest

— This will be a deep dive into designing for all at scale, and systematizing user journeys for all users through AI sprint methods

— Will also discuss the difference between augmentation and automation of a process

— Deep dive on using generative AI to design inclusively at scale

— I’ll give a brief overview about generative AI systems

-

Trained on massive amounts of data to learn the patterns of human language and generate new, coherent, meaningful content

— Why should we care though?

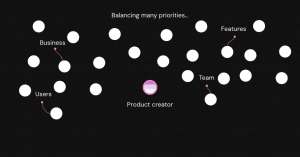

— As a product creator you can be product manager, researcher, designer, or anyone involved in digital product creation

— You are often balancing many priorities from features to users, business, and partners

— With manual effort, though, things fall through the cracks, whether features or users that are not accounted for

— As a creator, you can leverage AI to design for all and be more systematic in your process

— So what is product inclusion and equity?

-

You are designing for everyone and centering the most marginalized voices

-

Building at every phase of product creation process

— You are building on the right to belong, and doing so with a more systematic process as opposed to a more manual one

— This sounds great, but how do you apply a systematic product design process?

— Three pillars exist:

-

Documentation through user flows and journeys

-

Ideation through workshops or design sprints

-

Synthesis, with organizing and prioritizing

— Can be more systematic in speed and execution

— Can design more intentionally to avoid exclusion

— Product inclusion will be more important as well, and can actively promote diversity and inclusion

— So let’s review some data:

-

1 in 7 people in the world population has a disability

— 1 in 5 of the world population will be older than 50

— There can be up to 700 million people with disabling hearing loss by 2050, and we need to design for these users

-

Typically design with average users in mind

-

But there are people with many different abilities to design for, and they can be a big part of the population

— So as creators, we know we need to design for everyone

— Situational disabilities can be things like cooking or speaking a different language

— Disabilities can also be permanent, like color blindness or hand tremors

— We also design for multi-modality to use products on TVs, mobile devices, and having scalable experience across all devices

— So what happens when we don’t have these considerations?

— See recent news headlines for consequences of not accounting for women or minorities, or big misses in accessibility

— So how to systematize critical user journeys?

— We can use dimensions of identity: a set of hidden or visible characteristics that define who we are, how we think, and how we interact with others

— These identities intersect and create more complex identities

-

Intersectionality is what this is called

— We can use AI to do the following

-

Write inclusive user flows

-

Consider intersectionality with personas

-

Define complex identities for our users

— So let’s break down inclusive user journeys

-

For example: As a low-vision user, I can complete device set-up in < 30 seconds

— This can be written across dimensions of identity

— A critical journey can be broken down to “As a…task”
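
The "As a…task" breakdown can be sketched as a small template that is filled once per identity and task. This is only an illustration: apart from "low vision" and the device set-up task, the identity and task values below are hypothetical placeholders, and `user_journey` is a made-up helper name.

```python
from itertools import product

# Hypothetical values for illustration; only "low vision" and the
# device set-up task come from the example in the talk.
identities = ["low vision", "hard of hearing", "one-handed"]
tasks = ["complete device set-up", "book a dog walker"]


def user_journey(identity: str, task: str) -> str:
    """Fill the 'As a ..., I can ...' template for one identity and task."""
    return f"As a {identity} user, I can {task}."


# One journey for every (identity, task) combination.
journeys = [user_journey(i, t) for i, t in product(identities, tasks)]

for line in journeys:
    print(line)
```

Writing these by hand does not scale as the lists grow, which is where asking an LLM to draft them comes in.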

— Will use sample PawPaw dog-walking app to model example going forward

— Follow along by opening up a conversational AI tool of your choice while we break down good prompt design

— Prompt design

-

Tasks/Steps: Describing the guide to mapping out the experience

-

Persona: Who do we want the model to be?

-

Provide Examples: Tell model what you are looking for

-

Constraints: Prime model to understand constraints of ask

-

Chain: Refining original ask for more detail and original ideas through more constraints or examples
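
One way to keep these five parts explicit is to assemble the prompt from named fields before pasting it into a chat tool. The sketch below is an assumption about how that could look, not the speaker's tooling: `PromptSpec`, `build_prompt`, and `chain` are hypothetical names, and the wording of each field is illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    persona: str                      # who we want the model to be
    task: str                         # the task/steps to carry out
    examples: list[str] = field(default_factory=list)     # what we are looking for
    constraints: list[str] = field(default_factory=list)  # limits on the ask


def build_prompt(spec: PromptSpec) -> str:
    """Join persona, task, examples, and constraints into one prompt string."""
    parts = [f"You are {spec.persona}.", f"Task: {spec.task}"]
    if spec.examples:
        parts.append("Examples of what I am looking for:")
        parts.extend(f"- {e}" for e in spec.examples)
    if spec.constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in spec.constraints)
    return "\n".join(parts)


def chain(previous_prompt: str, refinement: str) -> str:
    """Build a follow-up prompt that refines the original ask (the 'Chain' step)."""
    return f"{previous_prompt}\n\nRefine your previous answer: {refinement}"


spec = PromptSpec(
    persona="an inclusive product designer",
    task="Write one user journey per dimension of identity for PawPaw, a dog-walking app.",
    examples=["As a low-vision user, I can complete device set-up in under 30 seconds."],
    constraints=["Put the results in a table.", "One sentence per journey."],
)
prompt = build_prompt(spec)
follow_up = chain(prompt, "add journeys for older adults")
print(follow_up)
```

Keeping the parts separate makes it easy to change one of them, such as the constraints, without rewriting the whole ask.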

— Will show some examples using Bard, but you can use other tools

— I’ll ask the large language model (LLM) to list out several dimensions of identity to use in the next exercise, with one word answers

— Use first prompt to frame your request

-

e.g. a description of the PawPaw app

— We can use the prompt as a reference to rewrite the user journey, writing one user journey for each dimension

-

PawPaw app for people near me, and search for available dog walkers nearby

— Can then generate better prompt that is incredibly detailed and specifies the nature of the ask

— Can pull out a list of dimensions of identity, and use that list with five different examples or hundreds of examples

— Ask the LLM to generate creative tasks based on a chosen identity

— You can repeat this with other dimensions of identity, like age and attributes, to create relevant user stories for each

— In example, I asked the model to create dimensions, and provided coaching at the end

-

Put info in table to read and primed product with user journey

— Some of the resulting user journeys were helpful and others missed the mark, so don’t be afraid to refine the original ask

— This approach can be used to scale other types of prompt framing for other user modalities or user types

— Can frame multi-modality, temporary disabilities, situational disabilities, or permanent disabilities

— Showing an example for permanent and temporary disabilities: reuse the exact same product prompt for reference and replace the dimensions of identity with things like broken bones, sprains, and strains
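
Reusing the product description while swapping out the list can be sketched as a template with a replaceable slot. This is an assumed structure for illustration: `PROMPT_TEMPLATE` and `frame_prompt` are hypothetical names, the product wording is paraphrased, and the identity values are placeholders.

```python
# Template with slots for the product, the category being explored,
# and the items in that category.
PROMPT_TEMPLATE = (
    "Product: {product}\n"
    "For each of the following {category}, write one user journey "
    "in the form 'As a ..., I can ...':\n{items}"
)


def frame_prompt(product: str, category: str, items: list[str]) -> str:
    """Keep the product description fixed and swap in a new category and list."""
    bullet_list = "\n".join(f"- {item}" for item in items)
    return PROMPT_TEMPLATE.format(product=product, category=category, items=bullet_list)


PRODUCT = "PawPaw, an app for finding available dog walkers nearby"

# Same product framing, two different lists.
identity_prompt = frame_prompt(PRODUCT, "dimensions of identity", ["age", "language"])
temporary_prompt = frame_prompt(
    PRODUCT, "temporary disabilities", ["broken bones", "sprains", "strains"]
)
print(temporary_prompt)
```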

— Can also leverage AI for How Might We (HMW) statements

— HMW statements reframe insights into opportunity areas

— HMW move from clicks or taps

— Using LLM to focus on specific business priorities like inclusive design, fewer clicks to find a dog walker, multi-modal design and ideas that are refined

— Another example is affinity clustering: taking a large number of ideas and finding common themes, as in a design sprint process

-

This workshop can take hours

-

Generative AI can take minutes

— Able to define five different themes, shown on the left

— Can try abstraction laddering to move from concrete details to abstract concepts, to solve a problem

-

Using ‘why’ to get more abstract

— Can give the LLM an example of abstraction laddering, prime it for easy scheduling on the PawPaw app, and refine the output later

— Let’s talk through augmenting versus automating

— LLMs are not 100% perfect and we need to stress-test the output

— There can be bias in the LLM, such as generated images that skew toward white people, as well as gender bias and occupational bias

-

So we need to augment not automate
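
"Augment, not automate" can be made concrete by keeping a human review step between the LLM output and anything the team designs from. The sketch below is an assumption, not a described workflow: `Draft`, `Status`, `review`, and `usable` are hypothetical names and the draft texts are invented.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    text: str                        # LLM-generated user journey
    status: Status = Status.PENDING  # nothing is trusted until reviewed
    note: str = ""                   # reviewer's reason, if any


def review(draft: Draft, approve: bool, note: str = "") -> Draft:
    """Record a human reviewer's decision on one generated draft."""
    draft.status = Status.APPROVED if approve else Status.REJECTED
    draft.note = note
    return draft


def usable(drafts: list[Draft]) -> list[str]:
    """Only drafts a person has approved move forward."""
    return [d.text for d in drafts if d.status is Status.APPROVED]


drafts = [
    Draft("As a low-vision user, I can book a walker using a screen reader."),
    Draft("As a user, I can tap the small icon to confirm."),
    Draft("As an older adult, I can reschedule a walk by voice."),
]
review(drafts[0], approve=True)
review(drafts[1], approve=False, note="Excludes users with hand tremors")
# drafts[2] was never reviewed, so it stays pending and is not used.
print(usable(drafts))
```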

— Finally, putting it together

-

We enter with intent to scale user journeys using dimensions of identity and have scaled output through an LLM

— This approach also works with abstraction laddering or leveraging How Might We (HMW) statements for scaled output

— Talked through systematizing user journeys to scale to all users

-

This is augmenting, but not automating that process

— Can scale to other design sprint methods

— Remember good prompt structure and adjust

-

If something doesn’t work initially, it doesn’t mean that it doesn’t work at all

-

Continue to refine prompt and outputs

Q&A

-

How are critical user journeys handled with such a vast user base?

-

When looking for user journey and usage, frame it out around user types and who we are designing for.

-

Can be overwhelming, but GenAI tool can write user journey for users in minutes

-

Try method for designing at scale

-

Will ChatGPT learn from itself for questions on DEI?

-

The LLM is learning from itself, but models are trained on biased data, so make sure you are testing it.

-

You can approve or disapprove of an output, so use that to train the models

-

In process of getting better, so keep as augmentation not automation

-

If trying to scale in other languages, what to keep in mind?

-

Depends on the language and some verb structure is different

-

Put in brackets and model replacements

-

Library of collected prompts to use?

-

Don’t have anything published, but will let you know

-

Downsides to approach outside of existing biased data?

-

Will get massive amount of info to sift through

-

Next, what to do with the information and how to design for 100 things

-

Need to define it, and use tools to synthesize it into a shorter list to design for

-

How to marry techniques with UXR?

-

Depends on the UXR; more inclusive UXR can take the user journeys, recruit those users for studies, and account for them

-

Design for people who are blind or neuro-diverse

-

Should managing prompt libraries be DesignOps responsibility?

-

Can be good one

-

Don’t need to manage a library, but can keep a list of ideas for running collaborative design sprints; you can create your own list of design sprint methods to share with your team

-

If it works once for you, you can keep using it
