Day 1 - From Users to Shapers of AI: The Future of Research

— The word AI was not mentioned enough today; the day started out super-spicy and became kind and additive, even though I expected a panel fight

— Excited to speak at Advancing Research. It's a bit unfair, but I hope we can talk to each other, so feel free to scan the QR code on the screen

- I’m here to talk through the latest project I’ve been working on

— We have learned so much today from people who have done a lot of work, and I want to be like them

- Thank you to all the speakers

— We have been going deep on organizations today, and I want to use this time to zoom out

— I want orgs to make better decisions, and AI is the latest tool for doing so, especially as employers will likely be pushing it

— On one side, I hear a desire to slap the AI label on everything and a push for a chief data officer

- I hear questions like this regularly through my work with Sudden Compass

— On the other, I hear concern and anxiety about what it all means

— I am question-driven, not career-driven, and what I will talk about draws on the last ten years of work at the company I started

- We have worked with everything from start-ups to the Fortune 500, focusing on operationalizing an approach to customer insights and solving various problems

— Insights are the foundation for impact, and we flow between client work and our own research

— Thinking about AI, I want to look at its big impact on humanity, with the goal of a cultural pause on AI

— On one end, AI has tremendous benefits, from finding new crystals that power new kinds of materials to discovering new galaxies

- Musicians are starting to use AI

- AI is being used to detect new STDs

— On the other end, you have scary stuff, like an AI that tried to convince a user to leave his wife for the AI, drawing on its cultural corpus

— Other end, you have scary stuff where AI tried to convince you to leave your wife for the AI, based on cultural corpus

- Other examples include Kate Middleton's fabricated photos

- Corrupting children's experiences, like the alleged Willy Wonka Experience

— There are real things to worry about though

- Stories about the dangers of design, with AI imitating the copyrighted work of writers, which resulted in a lawsuit

— Overall, there is a narrative of competition with AI, with arguments coming from very smart people

— The macro point: people are fearful of AI taking control of their lives

- On the other end, you have scary stuff where AI tried to convince you to leave your wife for the AI, based on cultural corpus

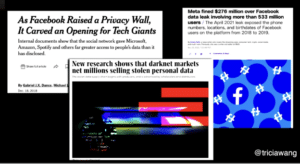

— The power over how we are represented doesn't sit with us but with tech platforms; while we get to contribute to our representation, it's on the platforms' terms

- At some level, we know this is screwed up

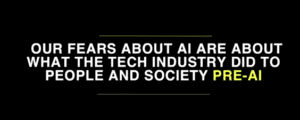

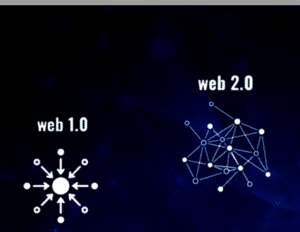

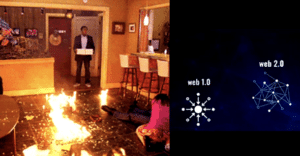

- These fears reflect what happened with Web 2.0 before generative AI came along

— So we need to understand what Web 2.0 did to us

— Author Cory Doctorow illustrated the dilemma through his novella Unauthorized Bread, where refugees are assigned to an apartment complex where they have to use only items authorized by the appliance companies in order for the appliances to work

— The refugee ultimately has to jailbreak the toaster in order to use it

- The refugee relates to the toaster as a user, and questions having to buy more expensive, unhealthy bread for it

— This bread story is a lesson about the situation we are in with Web 2.0, where large companies have moved to software subscriptions and we pay a monthly fee

- In this scenario, we use products and are dependent on platforms

— IoT toasters are out there, by the way, but the goal of the story is to show what the structure of Web 2.0 did to society

— Web 1.0 was an information democratization tool: you could go to a site and read articles, but access was limited

- Then laptops and smartphones came along, and people were no longer relying on GeoCities

- But we had no control over the social graph, or over our ability to read and write

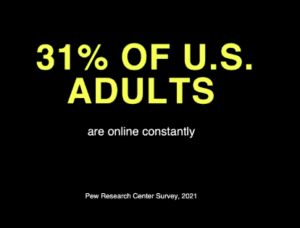

— Think of how much of life is now happening digitally

- We are all digital beings, becoming more digital as a new dimension of digital space is layered on top of our physical existence

- Our digital self is represented by billions of data points; we have no control over this representation, and new data is constantly being added to it

— Regardless, we know mass digitization has not led to a fairer distribution of gains and advantages, with companies taking advantage of us

— We have blocked ourselves from tracking, and ad blocking has served as the largest boycott in history

— Black cultural makers are often the ones hit hardest and are at risk of election manipulation

- Facebook has made changes to minimize the content on your screen, so you no longer see new political content, purposely muting people

- It actively deletes images and comments related to the Israel-Hamas war

— You can't escape this by avoiding Facebook, as it has created shadow profiles to follow you

— We are in a user trap: we need these tools to function in daily life, even though they erode our agency

- The tools are intentionally designed to keep us hooked, track our data in every possible way, and lock us into an endless scroll

- Being a user reduces our expression to a small set of quantifiable user interactions

- Platforms are so enormous that they are hard to escape, and totalizing, reaching into all aspects of our lives

- They shape our political orientations, our beliefs about ourselves, and the information we see, with no alternative available

- Even if you had no Facebook, how would you stay in touch with friends all over the world?

- You can't tell migrants communicating with family across the world to just log off

- So we put up with being in the user trap, and are stuck in an endless cycle

— The trap is that it is difficult to exist as a human being in society without these digital tools; you need to be a user to function at all

— We are so deep in the culture of the user that it's hard to push back. It rests on two criteria:

- Platforms set terms and rules of engagement

- Most important thing is to keep users online

— It is so normalized that we don't even think about it

- We are being reconceptualized as users and accept this as normal

- A whole mindset, language, and set of frameworks exists to create users who benefit platforms

— People saw Web 2.0 as an opportunity, and this is the result

- Some people benefited, but the benefits went primarily to centralized platform monopolies

— So why won’t AI be more of the same?

- We feel no sense of control over the inputs to AI models, and don't trust the outputs as a result

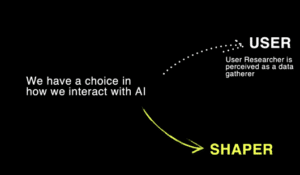

— Our fear of AI is really a fear of relating to AI as users

— The fear is that people haven't learned the lessons of Web 2.0, and will amplify its problems with the tools at their disposal

- Cory Doctorow said all of these Web 2.0 problems could have been prevented if companies had listened to UXRs and their colleagues

— What would be different this time around?

- There is no guarantee, and there is a risk of creating another user trap

— Is public pressure enough, or is the nature of the tech different?

- But AI tech is itself radically different and offers new vistas and opportunities

— AI products don't have to be centralized platforms; while social media was imposed on us, AI can be something we have more control over, with models that are more specific

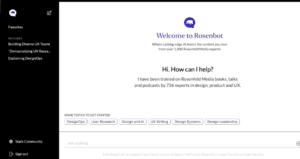

— People can build a computer to run their own LLM, or build a custom ChatGPT, such as Rosenfeld Media's Rosenbot

— I'll wrap up with what I've been doing fieldwork on

- I see it with people who are using AI to shape reality and make it work for their purposes

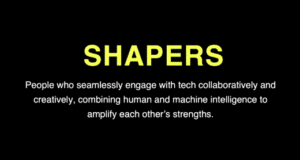

— People who engage with tech collaboratively and creatively

- The ability to proactively shape the tools at their disposal, like integrating AI into the classroom and requiring students to use it

- Using Midjourney to recreate childhood moments

- An immigrant building a custom GPT to help with becoming a legal citizen

— The power of Rosenchat and prompt machines can help shatter the myth of the passive user

- If we approach AI in a proactive way and spend time with it, it becomes less scary

- In my time with them, I have seen a more proactive, non-user identity emerge

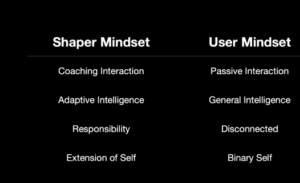

— This shaper identity involves four skills, the CARE skills:

- Coaching Interaction: the ability to coach AI and be coached by it

- Adaptive Intelligence: not general intelligence of the kind SAT scores measure, but holistic intelligence and building relationships

- Responsibility: a sense of responsibility to the partnership, infusing values into training models and outputs

- Extensions for Self: AI as an extension of themselves, the opposite of the user mindset

— These are part of the stories I'm collecting

— I think anyone can do this, returning to being proactive creators of the world

- Shapers point the way out of the user trap, and a way out for us

— We should treat ourselves as researchers, not just as UXRs

- We can note when someone shows up as a shaper or a user; research wasn't always UX

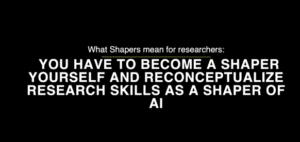

— We have to become shapers of AI and reconceptualize research: understand what it means to be a shaper of AI, be reflexive, and reframe our work around helping orgs navigate complexity and solve problems

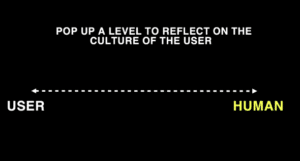

— I want us to navigate the tension between user and human and focus on people as people; we might be the last people accountable to humans

- If you feel guilty about the user landscape, put that aside

- I'm asking us to reflect on this and represent the tension

- Our job is to articulate the tension between treating people as humans versus as users

- This is what ethics is about, and we know how to zoom in, zoom out, and look at the meta level

- We have the ability to zoom in on immediate problems and zoom out to create strategic possibilities, and to talk about this

— No one knows how this will turn out, but recognize our ability to zoom in or zoom out

— AI will either create a more humane relationship with tech, or it might become an existential question for our field

— A few suggestions, as it gets harder and harder and we become more disconnected from the source:

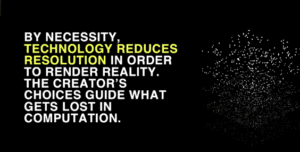

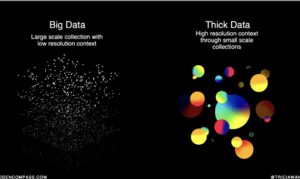

- As you go up the layers, there is further abstraction, as each new layer strips away context

- Loss of context is part and parcel of digitization

- Technology reduces resolution to render a library, and the creator's choices determine what is lost in fidelity

— We need to be mindful of that loss

— Frameworks are powerful for grappling with complexity and finding clarity, and whole panels will be talking about this

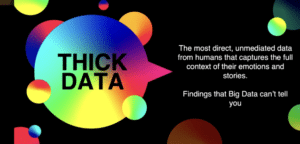

— We also need to triple down on thick data for the org

- A term used by data scientists and product people

— It's a rebranding of direct, unmediated data, and the reason many leaders need to make sure they sit under product and close to the business to make integration happen

— Thick data can reanimate lost context and rescue what was lost

- It's a way to think about the mindsets of teams

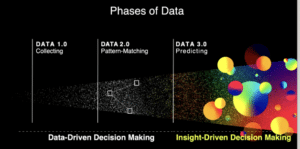

— Moving from data driven to insight driven

- Focus on cultural change: being data-driven has meant getting everything in one place, and now there is a new opportunity to become insight-driven

— Otherwise, we risk being perceived as just a policing function for bad design

— This consistently happens within data-driven organizations, where no matter what you do, UXRs are seen as data collectors removed from the business

- Enabling customer connection

— Finally, experiment with AI and figure out how it will help in your org

— Examples of experiments include a sticker GPT and Christmas card maker GPTs

— People are smarter than you think; they can collaborate with AI and will learn more about it as they do

— Pop up a level to reflect on the culture of the user: have the org reflect on itself, and reflect on ourselves too

- AI will force reflection, so ask: what happens if I show up as a user, and how can I show up as a shaper?

— Finally, can we be more intentional about not calling people users?

- Language reflects reality, and this will help us with the existential crisis looming in the next few years, which is happening to all knowledge workers

- A reckoning on the value of UXR

- Research won't go away, but the middle-skill work will, and we should shed the UXR image

- How do we reconceive knowledge and value?

— I'll leave you with the talk on excellence in craft and insights on the future

- In the meantime, let's be human. I'll turn it over to Bria

FAQ

- Thoughts on decentralized tech and blockchain for breaking out of the user trap?

- I have a take on this

- Web 3.0 has real technology, but the difference from Web 2.0 is that with the internet, tech bros and VCs grabbed onto its commercial potential slowly

- With Web 3.0, greed and money took over so quickly that it became very difficult to iterate on the tech to find a use case

- There is real value in Web 3.0, particularly in a decentralized financial system

- Historically, many communities relying on a centralized source have been at risk, but it will take time for the value to emerge

- Do we have to metaphorically chew our arms off to escape the user trap?

- It's idealistic to tell people to get off platforms, given the value they bring

- Big platforms aren’t out to get us, but they are leading to destructive outcomes

- View it as guerrilla warfare: understand the tools in order to resist

- Understand the technologies and be in them; there's no option to get off the platforms

- How are 'shapers' different from early adopters relative to the mass market? Would their behaviors be meaningfully different?

- We need to actually change the way we hire and teach to build a generation of shapers, and change educational institutions

- Not all shapers need this, but they do need to be nurtured, and I acknowledge my sample is biased

- I think the current narrative of 'AI will destroy us all' is scaring middle users away

- We need to start learning how to work with it, and not let fear dominate the conversation